SDS 293: Modeling for Machine Learning

Albert Y. Kim

Last updated on 2020-11-23

Schedule

Lec 29: Fri 4/17

Announcements

- PS9 lite on “linear regression via linear algebra” is now posted in the GitHub organization for this course. This is the final problem set for the course. Because it is very short, you’ll be working individually.

- Book club readings are posted in the schedule above. For example, Monday’s readings are Chapters 1-2. By Monday 9:30am EDT I will post a brief video summarizing my thoughts on the readings. We can then discuss the readings in `#questions` on Slack.

Lec 28: Wed 4/15

Announcements

- Today is your last chance to let me know if our plan for Midterm II this Friday does not work for you. See Midterm II for more information.

- I will post a plan for our “Deep Learning” book club on Friday. See Lec27 for more information.

Chalk Talk

- First watch the screencast on “simple linear regression as matrix algebra” I’ve posted below.

- Then go over the `regression_linear_algebra.Rmd` file I will distribute on Slack. Here you will study the effects of collinearity in regression (which you learned in SDS 291 Multiple Regression), but from a linear algebra perspective.
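As a minimal sketch of the idea (this is not the contents of `regression_linear_algebra.Rmd`; the simulated data and all variable names below are assumptions for illustration), the least-squares coefficients can be computed as \(\widehat{\beta} = (X^TX)^{-1}X^Ty\), and collinearity shows up as a nearly singular \(X^TX\):

```r
# Simulated data (hypothetical; chosen only for illustration)
set.seed(293)
n  <- 100
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + 2 * x1 - 3 * x2 + rnorm(n, sd = 0.5)

# Design matrix with a column of 1s for the intercept
X <- cbind(intercept = 1, x1 = x1, x2 = x2)

# Normal equations: solve (X'X) beta = X'y
beta_hat <- solve(t(X) %*% X, t(X) %*% y)

# Agrees with lm() up to numerical precision
beta_lm <- coef(lm(y ~ x1 + x2))

# Collinearity: x3 is almost an exact copy of x1, so X'X is nearly singular
x3 <- x1 + rnorm(n, sd = 1e-4)
X_collinear <- cbind(intercept = 1, x1 = x1, x3 = x3)

# Condition numbers: a huge value signals an unstable (X'X)^{-1}
kappa_ok        <- kappa(t(X) %*% X, exact = TRUE)
kappa_collinear <- kappa(t(X_collinear) %*% X_collinear, exact = TRUE)
```

Comparing `kappa_ok` with `kappa_collinear` gives a rough numerical feel for why coefficient estimates become unstable when two predictors carry nearly the same information.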

Lec 27: Mon 4/13

Announcements

- See Midterm II details below.

- PS10 lite on Deep learning/neural nets is now cancelled. So after you submit PS9 lite on Fri 4/24 at 9pm EDT, you have completed all evaluative components of this course.

- The last two weeks will be an optional book club on Deep Learning Illustrated (see Lec24 for how to obtain free access to the eBook). Please let me know if you have ideas for the format.

- Before being thrown into a massive task, I always find it helpful to set some goals and define a purpose. This helps me stay focused and not get lost in the weeds:

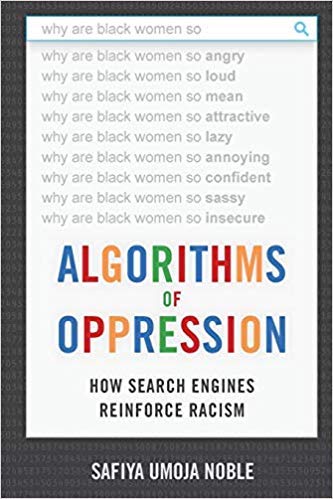

- Micro goal of book club: Understand what researchers mean when they say deep learning is a “black box” where nobody really understands how these algorithms work. Prof. Safiya Umoja Noble stated in the podcast on “Algorithms of Oppression” from Lec18 that this lack of interpretability will be problematic for society as more of our lives become dependent on such algorithms.

- Macro goal of book club: To develop you into ambassadors in your communities who can explain to laypeople roughly how these algorithms work and what impact they can have if developed and implemented recklessly.

Midterm II

Here is my plan for Midterm II. However, I am open to discussion and dialogue. If any of this does not work for you, your learning environment, or your living situation, please let me know on Slack ASAP.

a) Administrative

- Open-book and no time limit. However, per the Honor Code, you must complete your work individually.

- Time window:

- The midterm will be available on Moodle on Fri 4/17 at 8am EDT

- You must submit your work on Moodle before ~~Tue 4/21~~ Wed 4/22 11pm EDT

- What you will need:

- A laptop to view the midterm. You do not need to print it.

- 8.5 x 11 inch blank sheets of paper where you will write your responses. Preferably solid white, but lined/gridded works too.

- Some device to convert your responses to electronic format for submission on Moodle (see next point).

- Here are three ways to convert your responses to electronic format for submission on Moodle:

- If you have one, use a scanner to create a PDF. I’m assuming that most of you don’t have one.

- Use the CamScanner app on your phone to create PDFs. I’m assuming that this will work for most of you. Note: they will be pushing you to purchase the premium version, but you don’t need to buy it. The free version will suffice.

- As a last resort, take pictures with your phone and submit those.

b) Topics covered:

- You will be given an optional opportunity to re-do Midterm I Question 2. It is important for everyone in this course to master the themes in this question in particular. Feel free to discuss with your peers.

- Some form of the other questions in Midterm I may re-appear in Midterm II, so I suggest you go over Midterm I.

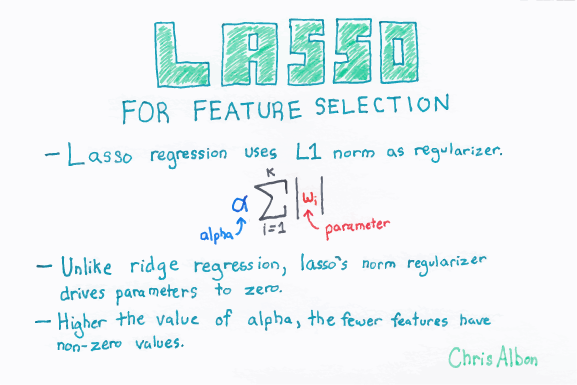

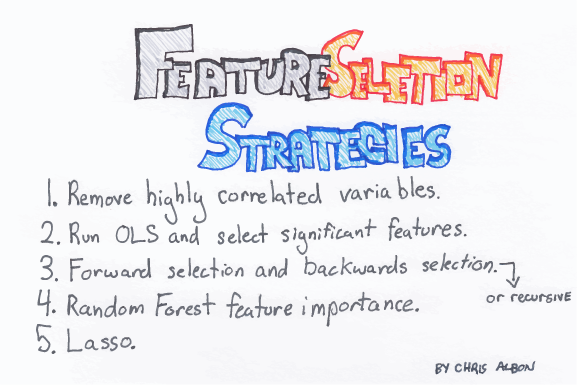

- LASSO Regularization: Lec 19-24. In particular, you should compare-and-contrast LASSO with CART. While the details of these two methods differ slightly, they are almost identical in spirit.

- Random Forests: Lec 25-26.

- PS7 on LASSO: Please save the `PS7_solutions.Rmd` file I will distribute over Slack in your `PS7` RStudio Project folder and knit it.

Chalk Talk

- Note that “unsupervised learning” refers to machine learning methods where there is no outcome variable \(y\). Two of the main methods for unsupervised learning are:

- Principal Component Analysis (PCA): A method for dimension reduction of your \(p\) predictor variables to a smaller number.

- Cluster analysis: Assigning observations to “clusters” whereby within-cluster differences are minimized, but between-cluster differences are maximized.

- Download `PCA.Rmd` and `sat.csv` from Slack and put them in your MassMutual RStudio Project and go over `PCA.Rmd`

- Note that conveying all the theory is very difficult without doing an actual “chalk talk.” So please try to focus on the ideas in a general and qualitative sense and not get bogged down in the details:

- A basis: A set of vectors that span a space. In this case, some combination of the blue and red vectors (i.e. arrows) allows you to “reach” anywhere in the 2D space.

- An orthogonal basis: A basis where the vectors are perpendicular, i.e. form a 90 degree angle, i.e. have a dot product of 0.

- PCA as a linear transformation as represented by a matrix. In this case, the transformation is a “rotation.”

- “Eigen” is German for “its own.” In this case an eigenvalue is a number telling you how much variance there is in the data along a particular dimension. The particular dimension for a particular eigenvalue is the eigenvector.
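To make the eigenvalue and eigenvector ideas above a little more concrete, here is a rough sketch (it is not taken from `PCA.Rmd`; the simulated 2D data and variable names are assumptions for illustration). It checks that the eigenvectors of the covariance matrix are perpendicular and that the eigenvalues equal the variance of the data along each rotated dimension:

```r
# Simulated, correlated 2D data (hypothetical)
set.seed(293)
n  <- 200
x1 <- rnorm(n)
x2 <- 0.8 * x1 + rnorm(n, sd = 0.4)
X  <- cbind(x1, x2)

# Eigen-decomposition of the covariance matrix
eig <- eigen(cov(X))
eig$values   # variance along each principal direction
eig$vectors  # columns are the eigenvectors

# The eigenvectors form an orthogonal basis: their dot product is 0
dot_product <- sum(eig$vectors[, 1] * eig$vectors[, 2])

# prcomp() reports the same variances as sdev^2
pca <- prcomp(X)
pca$sdev^2

# "Rotating" the centered data onto the eigenvectors
scores <- scale(X, center = TRUE, scale = FALSE) %*% eig$vectors
apply(scores, 2, var)  # matches eig$values
```

Dimension reduction then amounts to keeping only the first few columns of `scores`, i.e. the directions with the largest eigenvalues.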

Lec XX: Fri 4/10

Announcements

- We’ll talk about Midterm II on Lec27 on Monday.

- PS7 is due tonight at 9pm EDT.

- PS8 lite is now posted in the GitHub organization for this course:

- This PS is very quick: just fit ONE random forests model using any approach of your choice.

- However, the submission format is a little different. You will be “forking” a copy of PS8 lite and then making a “GitHub Pull Request.” Please watch this 6min video on how before you start working:

Chalk Talk

None

Lec 26: Wed 4/8

Announcements

- Let’s all catch our breath on Friday 4/10:

- No video lecture to be posted

- Please work on PS7 on LASSO

- “Office hours” format and times are set (this information has been updated in the syllabus as well):

- I will be “live answering” questions in the `#questions` channel on Slack at the following times:

- MWF 9:30am - 10:30am EDT. My goal is to have lectures posted by 9:30am.

- TuTh 4:15pm - 5:00pm EDT

- If a particular question gets too involved to explain over Slack, we’ll jump into a Zoom room as needed.

- For more private discussions, please book an appointment at bit.ly/meet_with_albert. If none of the times work for you or they do not align with your timezone, please Slack me.

Chalk Talk

- Have the following ready:

- Save the `random_forests.Rmd` file I’ve distributed over Slack into your MassMutual RStudio Project.

- Run all the code up to line 86 where we view the train data

- Have this link about what `mtry` does open in your browser.

- Then watch this 7m screencast

- Then go over all of `random_forests.Rmd`

Lec 25: Mon 4/6

Announcements

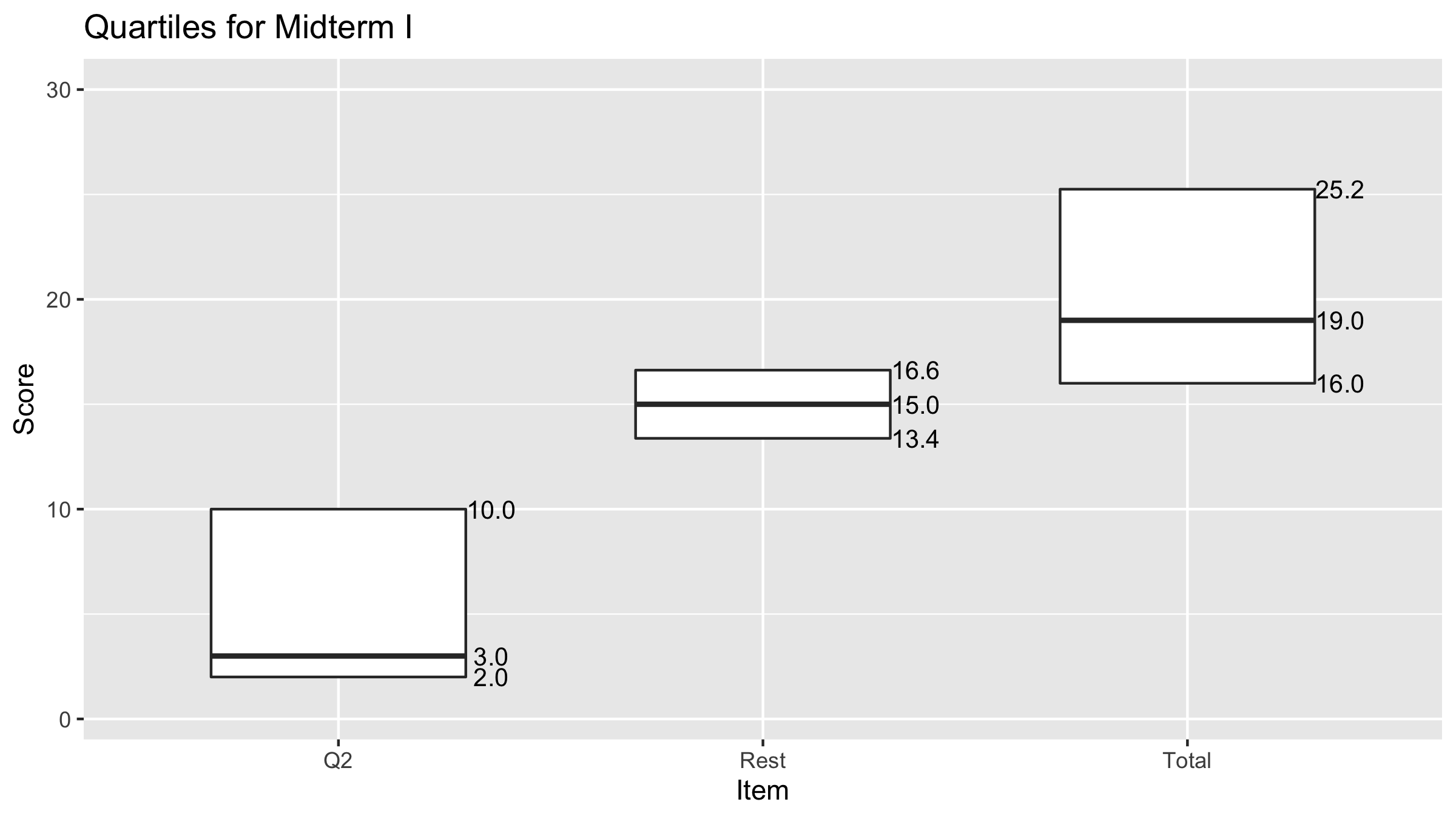

Midterm I has been graded and distributed.

- You have the option to re-do Question 2 during Midterm II to earn back full credit. That’s why there are two grades on Moodle: one for Question 2 and one for the rest.

- Distribution of scores:

- When studying for Midterm II, I highly recommend you watch this screencast going over the solutions because some variation of some of the questions will likely appear on Midterm II.

Chalk Talk

- ICYMI: “An Introduction to Statistical Learning with Applications in R” (ISLR) is a good reference to have in case you want additional explanation. You can download a PDF here. For example, today’s lecture on Random Forests is based on book page 316 (corresponding to PDF page 330).

- Have the following ready before you watch the screencast:

- In your browser, easy access to the images below

- In another tab of your browser, ModernDive Chapter 8 on Bootstrapping. In particular, the beginning of Chapter 8 until the end of section 8.2 acts as a refresher on Bootstrapping.

- MassMutual -> `LASSO.R`. Load all the packages, then go to around line 59 where you fit a LASSO model with `glmnet(..., alpha = 1, ...)`.

- Then load the help file by running `?glmnet` -> Arguments -> alpha.

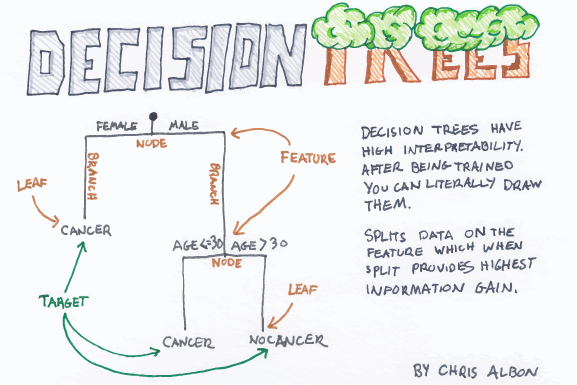

1. Closing out LASSO

A “Previously on SDS293” episode recap in images:

- Image 1: LASSO for model selection AKA variable/feature selection:

- Image 2: More on feature selection:

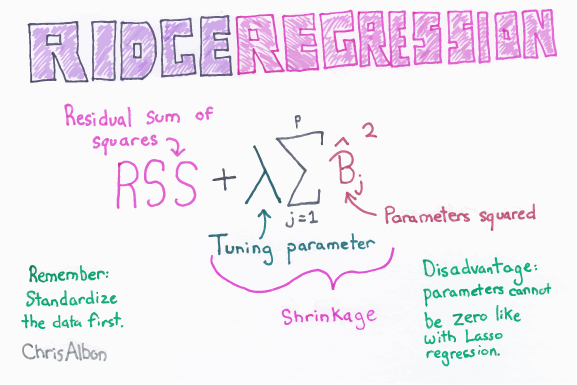

- Image 3: LASSO is almost identical to another regularization method called “ridge regression.”

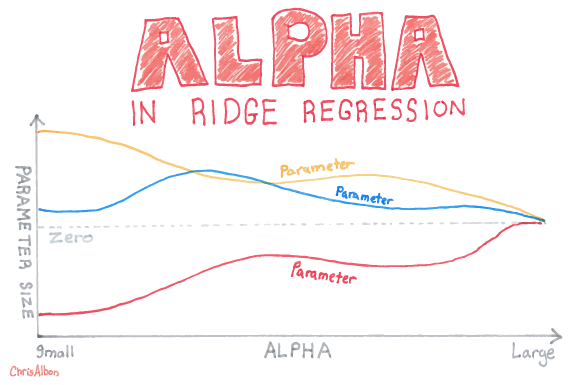

- Image 4: The \(\alpha\) in the \(\widehat{\beta}_j\) trajectory plot below is akin to \(\lambda\) in LASSO

2. Random Forests

A “Next time on SDS293” episode sneak peek in images:

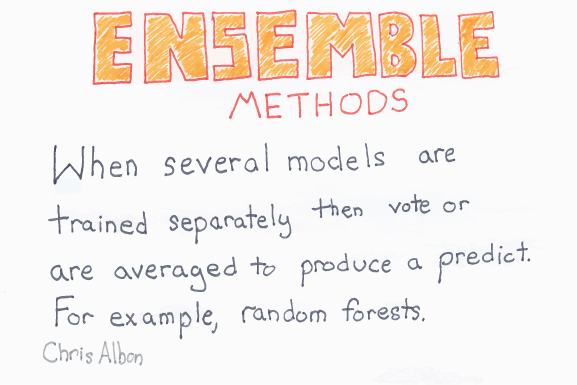

- Image 1: General class of methods called “ensemble” methods:

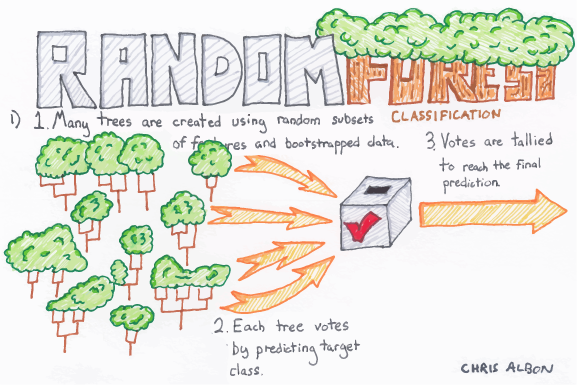

- Image 2: Random forests are one such “ensemble” method:

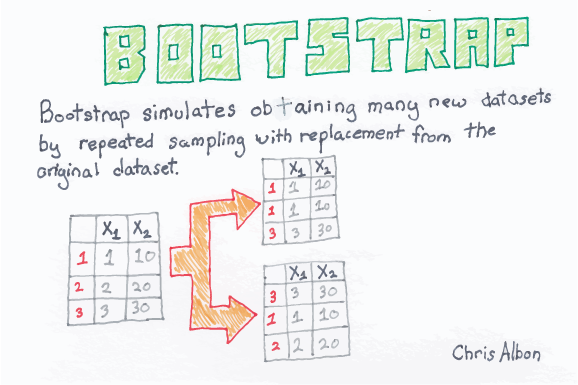

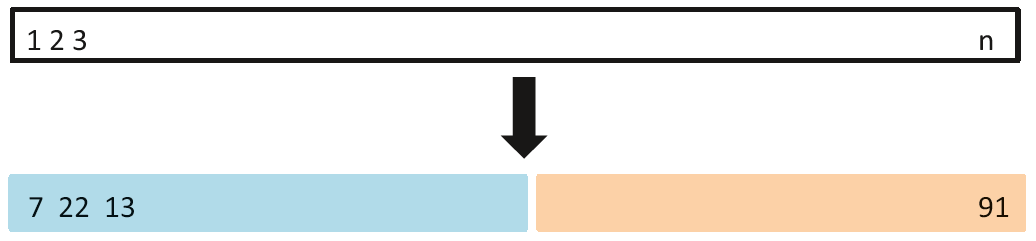

- Image 3: Bootstrap resampling with replacement:

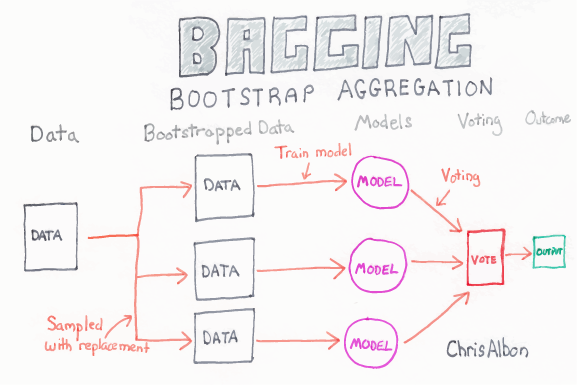

- Image 5: Bagging is another class of methods that uses bootstrapping:

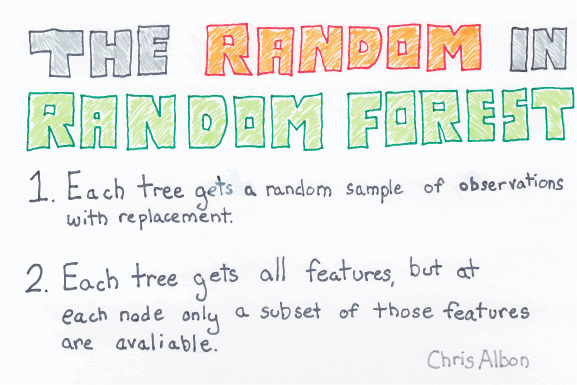

- Image 6: But wait, there’s more! Injecting a second source of variation
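Here is a rough sketch of bootstrap resampling with replacement and of bagging. To keep it to base R, it swaps in `lm()` as the model fit to each resample; this is an assumption made for illustration only, since random forests fit trees instead and additionally consider only a random subset of `mtry` predictors at each split. The simulated data and variable names are hypothetical:

```r
# Simulated training data (hypothetical)
set.seed(293)
n <- 50
train <- data.frame(x = runif(n, 0, 10))
train$y <- 3 + 2 * train$x + rnorm(n)

# One bootstrap resample: n row indices drawn with replacement
boot_rows <- sample(n, size = n, replace = TRUE)
length(unique(boot_rows))  # typically fewer than n distinct rows

# Bagging: fit one model per bootstrap resample, then average the predictions
B <- 100
new_data <- data.frame(x = c(2, 5, 8))
boot_predictions <- sapply(seq_len(B), function(b) {
  rows <- sample(n, size = n, replace = TRUE)
  fit  <- lm(y ~ x, data = train[rows, ])
  predict(fit, newdata = new_data)
})

# Rows = new observations, columns = bootstrap models
bagged_prediction <- rowMeans(boot_predictions)
```

Each column of `boot_predictions` is one model's "vote"; averaging across columns is what makes this an ensemble method.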

Lec 24: Fri 4/3

Announcements

- PS6 Quiz is now live on Moodle until Saturday 4/4 11:59pm EDT.

- Please set up access for the Deep Learning Illustrated eBook we’ll be using later in the semester:

- No video lecture today.

- PS7 is now posted in the class GitHub organization. If you want to work in groups, look at the PS7 `README.md` ASAP. This is an excellent opportunity to practice remote collaboration using a combination of Slack, GitHub, and Zoom. These are professional skills that I have found invaluable for my career.

Lec 23: Wed 4/1

Announcements

Chalk Talk

- Recall from Lec01 “What is Machine Learning?” slide 27, the 3 models visualized in blue in the bottom three plots:

- The left model (straight line) is very low complexity, but it really doesn’t fit the points well. The lack of fit can be measured with the residual sum of squares (RSS): the vertical distances between the black points and the blue lines, all squared, and then summed. Note the formula for RSS is the “heart” of the formula for RMSE.

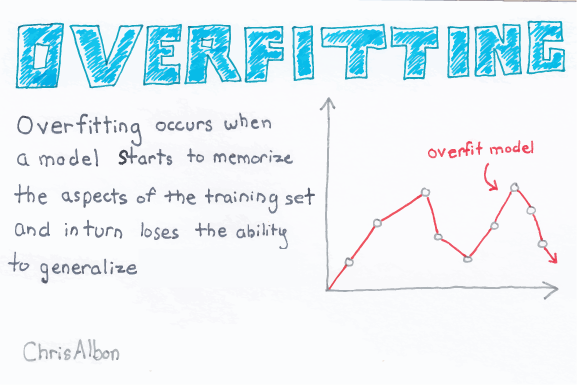

- The right model (very squiggly line) fits the data well and thus has a very small RSS, but this comes at the cost of a model with very high complexity. Accordingly, this model probably does not generalize well to other data points coming from the same population.

- The middle model (bimodal curve) is the right balance of complexity and fit.

- The theory behind LASSO:

- Go to the MassMutual RStudio Project and open `CART.Rmd` and `LASSO.Rmd` and look at the optimization formulas for both cases.

- Watch the following (20m29s) video

- If you have questions, ask in the `#questions` Slack channel indicating what your question refers to. For example: “Video for Lec22.a) 10:52 Shouldn’t that be y-hat = y-bar?”

- Please give any feedback about your video viewing experience in `#general`. Please do not be shy with your criticisms; for remote lectures to work, production values matter.
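For reference, here are the two optimization problems in their standard textbook form, as in ISLR; the notation in `CART.Rmd` and `LASSO.Rmd` may differ. For a regression tree \(T\) with \(|T|\) terminal nodes and regions \(R_m\), cost-complexity pruning minimizes

\[
\sum_{m=1}^{|T|} \sum_{i:\, x_i \in R_m} \left(y_i - \widehat{y}_{R_m}\right)^2 + \alpha |T|
\]

while LASSO minimizes

\[
\sum_{i=1}^{n} \Big(y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij}\Big)^2 + \lambda \sum_{j=1}^{p} |\beta_j|
\]

In both cases the first term is the RSS (lack of fit) and the second term is a penalty on complexity, with \(\alpha\) and \(\lambda\) acting as the “dial” that trades one off against the other.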

Coding exercise

- Using the same `LASSO.R` from Lec22, go over the remainder of the code: section 5, or roughly lines 183-295

- If you have questions, ask in the `#questions` Slack channel indicating what your question refers to. For example: “Lec22 `LASSO.R` around line 142 where it says `get_LASSO_coefficients()` what is that?”

Lec 22: Mon 3/30

Announcements

- If you haven’t already, please read my update from Friday 3/27 in the “COVID-19” tab above.

- Problem sets:

- We’re going to resume our problem set schedule with PS6 as originally posted under Lec18. A quiz on Moodle will be available from Friday 4/3 12:01am EDT to Saturday 4/4 11:59pm EDT

- PS7 on LASSO will be distributed as usual on Friday 4/3. More details, including groups, will come on Friday.

- Zoom office hours and appointments: Posted on the syllabus. I will post more office hours once I read over all your survey responses.

Chalk Talk

- Open your MassMutual RStudio Projects and open:

- `regression.Rmd`

- `CART.Rmd`

- `LASSO.Rmd`

- Watch the following two videos. Today has a little more video content than you can usually expect because we need to recap.

- A recap of regression and CART (16m37s)

- Introduction to LASSO (13m05s)

- If you have questions, ask in the `#questions` Slack channel indicating what your question refers to. For example: “Video for Lec22.a) 10:52 Shouldn’t that be y-hat = y-bar?” (the answer is yes)

- Please give any feedback about your video viewing experience in `#general`. Please do not be shy with your criticisms; for remote lectures to work, production values matter. For example, I already know I need to work on:

- Equalizing the sound better

- Stabilizing the focus of the camera

Coding exercise

- Go to `LASSO.R` on GitHub

- Copy the contents of this file and save it in a file `LASSO.R` in your MassMutual RStudio Project

- Go over the code up to and including section 4.d), or roughly lines 1-174

- If you have questions, ask in the `#questions` Slack channel indicating what your question refers to. For example: “Lec22 `LASSO.R` around line 142 where it says `get_LASSO_coefficients()` what is that?”

Lec 21: Fri 3/13

Announcements

- More COVID-19 preparations

Lec 20: Wed 3/11

Announcements

- PS06 Quiz on Friday is cancelled until things settle down

- Acknowledgement of situation

- Issue of communication

- Fill out Remote Learning Preparedness Survey

- Testing Zoom video conferencing

- Testing lecture format

- Practice run of creating meetings

Lec 19: Mon 3/9

Announcements

- Midterm I is still going on. Honor Code still applies.

Recap

- StitchFix exercise

- Slack `#general` message

- Closing comments

Chalk Talk

- Finishing ROC curves:

- Open MassMutual.Rproj -> `ROC.Rmd` -> `## Background` -> first code chunk where the `values` csv is loaded. Replace its contents with what I just shared on Slack.

- 4 alternative scenarios of ROC curves

- Starting LASSO: L1 regularization for adjusting model complexity of linear models

- Open MassMutual.Rproj -> `LASSO.Rmd`

Lec 18: Fri 3/6

Announcements

- FYI: you will not be penalized for missing class due to illness.

- Shorter PS6: Listen to New Economics Founding 58m podcast interview of Safiya Umoja Noble, author of Algorithms of Oppression. Then on Friday 3/13:

- In-class quiz. Not meant to be difficult, rather just to ensure you actually listen to the podcast.

- In-class discussion.

Recap

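- Based on a decision threshold \(p^*\), a 2x2 contingency table of the possible truth vs prediction scenarios.

- An ROC curve is a parametric curve that maps each threshold \(p^*\) to a point \((x(p^*), y(p^*))\), where \(x\) is the false positive rate (1 - specificity) and \(y\) is the true positive rate (sensitivity).

- The AUC is the area under the ROC curve. Always between 0 & 1.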
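The recap above can be sketched in a few lines of base R. This is only an illustration under stated assumptions: the outcomes `y`, the fitted probabilities `p_hat`, and the helper `roc_point()` are all hypothetical and not from `ROC.Rmd`. For each threshold \(p^*\) we build the 2x2 contingency table counts, convert them to a point on the curve, and then approximate the AUC with the trapezoidal rule:

```r
# Simulated binary outcomes and fitted probabilities (hypothetical)
set.seed(293)
n <- 500
y <- rbinom(n, size = 1, prob = 0.4)
p_hat <- plogis(-0.5 + 1.5 * y + rnorm(n))

# For one threshold p*, return the point (x(p*), y(p*)) on the ROC curve
roc_point <- function(p_star, y, p_hat) {
  y_hat <- as.integer(p_hat >= p_star)
  tp <- sum(y_hat == 1 & y == 1)
  fp <- sum(y_hat == 1 & y == 0)
  fn <- sum(y_hat == 0 & y == 1)
  tn <- sum(y_hat == 0 & y == 0)
  c(fpr = fp / (fp + tn),   # x: 1 - specificity
    tpr = tp / (tp + fn))   # y: sensitivity
}

# Sweep p* from 1 down to 0 so the curve runs from (0, 0) to (1, 1)
thresholds <- seq(1, 0, by = -0.001)
roc <- t(sapply(thresholds, roc_point, y = y, p_hat = p_hat))

# AUC via the trapezoidal rule
auc <- sum(diff(roc[, "fpr"]) *
             (head(roc[, "tpr"], -1) + tail(roc[, "tpr"], -1)) / 2)
```

Running `plot(roc, type = "l")` draws the curve; an uninformative model would hug the diagonal and give an AUC near 0.5.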

Chalk Talk

- Go over solutions to PS5

- Different ROC curves and AUC’s for different prediction scenarios.

- In-class activity

Lec 17: Wed 3/4

Announcements

Recap

NA

Chalk Talk

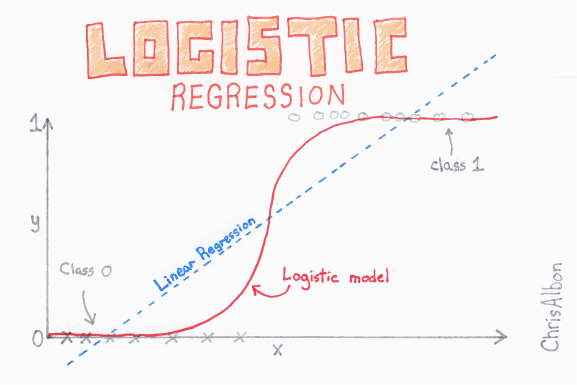

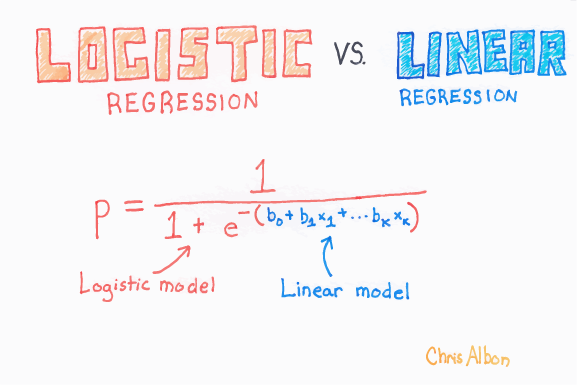

- What curve does logistic regression fit?

- Binary classification problems and ROC curves:

- Decision rule for \(\widehat{y}\) based on \(\widehat{p}\)

- 2x2 contingency tables for a given threshold \(p^*\). Then based on a contingency table for a given threshold \(p^*\), some examples of table summaries include Sensitivity and Specificity

- Math theory: Parametric curve/equation

- MassMutual RStudio Project -> `ROC.Rmd` Shiny App.

Lec 16: Mon 3/2

Announcements

- Discuss Midterm I

- PS5

Chalk Talk

- Go over MassMutual -> `logistic_regression.Rmd`

- Time permitting start ROC curves: `ROC.Rmd` Shiny app

Lec 15: Fri 2/28

Announcements

- Midterm I is a Seelye self-scheduled exam from next Fri 3/6 5pm thru Mon 3/9 11pm. We’ll discuss on Monday Lec 16.

- PS5 on logistic regression assigned.

- PS6 assigned on Fri 3/6 will relate to ethics in machine learning and will not involve any coding.

- Recorded screencast of PS3 solutions: picking the “optimal” complexity parameter \(\alpha\) via cross-validation

Chalk Talk

- In-class demo of possible approach to PS4: implementing algorithm to obtain the “optimal” complexity parameter `cp` value using cross-validation (we will be verifying that your PS4 submissions on GitHub are all before 9am today).

- Go over MassMutual -> `logistic_regression.Rmd`

- Time permitting start ROC curves: `ROC.Rmd` Shiny app

Lec 14: Wed 2/26

Announcements

NA

Chalk Talk

- Step back: Recall cross-validation is a generalization of the “validation set” idea to ensure we are not over-fitting models

- Intro to logistic regression

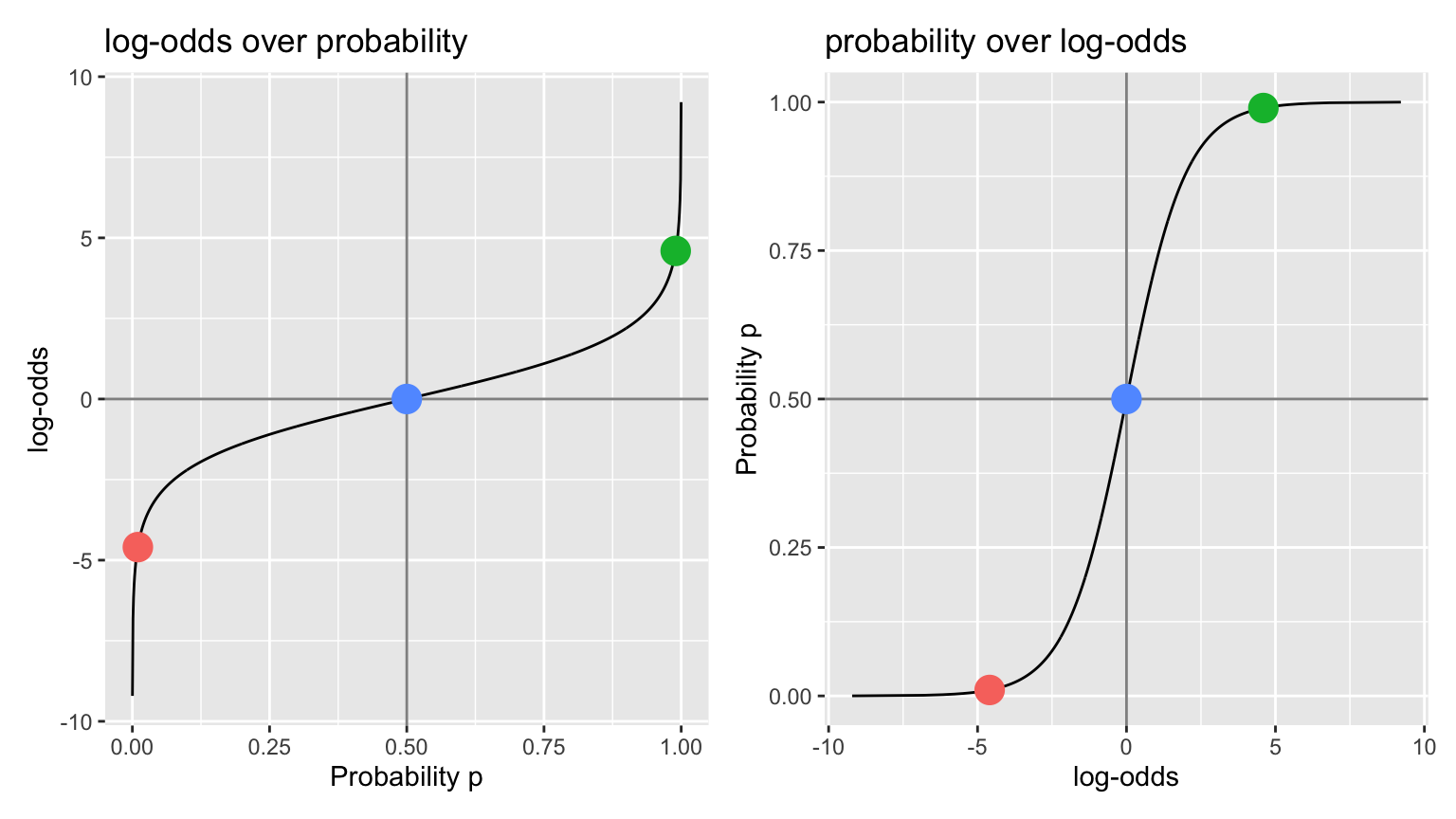

- Transforming probability space to log-odds/logit space. The following two plots are the same but with the axes flipped. In other words:

- The left plot visualizes how we map from probability space \([0,1]\) to log-odds space \((-\infty, \infty)\)

- The right plot visualizes how we map from log-odds space \((-\infty, \infty)\) back to probability space \([0,1]\)

- At 10:10, 30 minutes of pair programming for PS5 but now with the other person typing.
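To make the two mappings between probability space and log-odds space concrete, here is a minimal sketch. The function names `logit()` and `inv_logit()` are illustrative choices, not from the course files; base R's `qlogis()` and `plogis()` compute the same pair:

```r
# Probability space -> log-odds space
logit <- function(p) log(p / (1 - p))

# Log-odds space -> probability space
inv_logit <- function(z) 1 / (1 + exp(-z))

p <- c(0.01, 0.25, 0.5, 0.75, 0.99)
z <- logit(p)
inv_logit(z)   # recovers p

# Base R provides the same pair as qlogis() and plogis()
all.equal(z, qlogis(p))
all.equal(p, plogis(z))
```

Logistic regression fits a straight line in log-odds space, and `inv_logit()` is what bends that line into an S-shaped curve back in probability space.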

Lec 13: Mon 2/24

Announcements

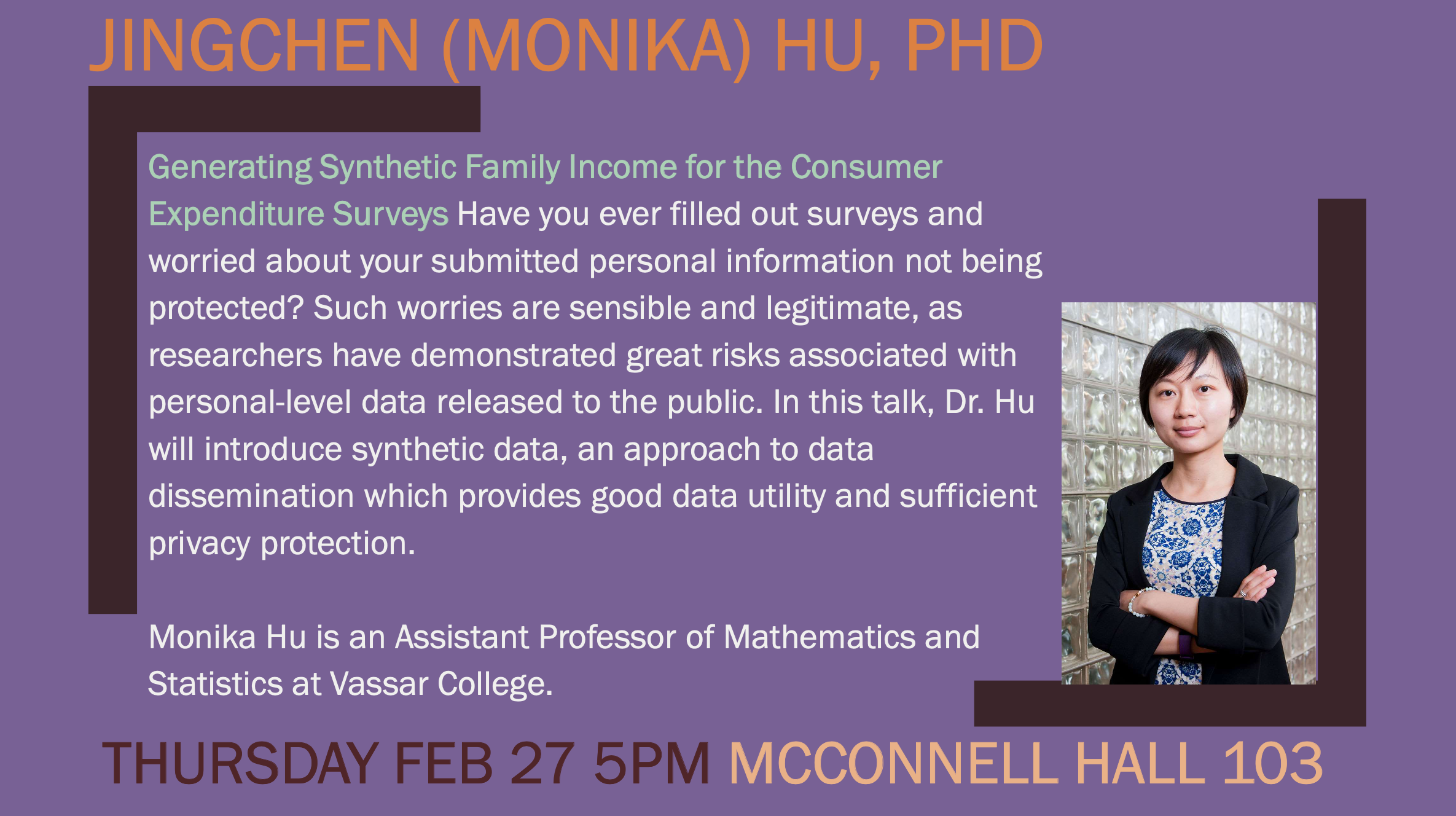

- SDS talk this week on balancing between “having useful data” and “maintaining sufficient privacy protection”:

- What: “Generating Synthetic Family Income for the Consumer Expenditure Surveys”

- Who: Prof. Jingchen (Monika) Hu, Vassar College

- When: Thursday Feb 27, 5pm

- Where: McConnell Hall 103

Chalk Talk

- Lec01 “What is Machine Learning?” slide 28

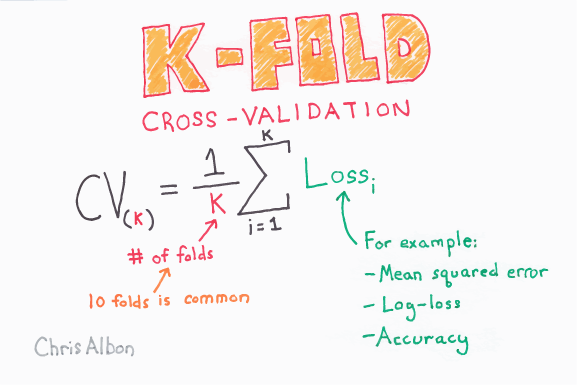

- \(k\)-fold cross-validation

- Pseudocode for:

- Using cross-validation to estimate error

- Using cross-validation error estimates to find “optimal” level of complexity

- Using “optimal” level of complexity to make predictions
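The three pseudocode steps above can be sketched as follows. This is one possible version under stated assumptions, not the in-class pseudocode: it uses the degree of a polynomial `lm()` fit as the “complexity” dial in place of CART's `cp`, and the simulated data and variable names are hypothetical:

```r
# Simulated training data (hypothetical)
set.seed(293)
n <- 200
train <- data.frame(x = runif(n, -3, 3))
train$y <- sin(train$x) + rnorm(n, sd = 0.3)

k <- 5
# Randomly assign each row to one of k folds
fold <- sample(rep(seq_len(k), length.out = n))
degrees <- 1:8

# Step 1: for each complexity level, estimate error via k-fold cross-validation
cv_rmse <- sapply(degrees, function(d) {
  fold_rmse <- sapply(seq_len(k), function(j) {
    fit  <- lm(y ~ poly(x, d), data = train[fold != j, ])
    pred <- predict(fit, newdata = train[fold == j, ])
    sqrt(mean((train$y[fold == j] - pred)^2))
  })
  mean(fold_rmse)
})

# Step 2: the "optimal" complexity minimizes the cross-validated error
best_degree <- degrees[which.min(cv_rmse)]

# Step 3: refit on all the training data at that complexity, then predict
final_fit <- lm(y ~ poly(x, best_degree), data = train)
test <- data.frame(x = c(-2, 0, 2))
predict(final_fit, newdata = test)
```

The key design choice is that each fold's error is computed only on rows the model did not see during fitting, which is the “validation set” idea repeated \(k\) times.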

In-class Exercise

- Pair-programming for PS4

Lec 12: Fri 2/21

Announcements

NA

Topics

- In-class exercise

- Lec01 “What is Machine Learning?” slide 28

- MassMutual RStudio Project -> `coding.Rmd` -> `# Wednesday, July 24 2019` -> `## Cross-validation`

Lec 11: Wed 2/19

Announcements

Chalk Talk

Recap of Lec10:

- Idea of “validation sets”:

- We want to know the proportion of the sampling bowl’s balls that are red. We don’t have the energy to do a census, so we take a sample. Why is it important to mix the bowl first?

- Lec01 “What is Machine Learning?” slide 26 stresses importance of “validation sets” when training a self-driving car.

Today:

- Create a table comparing 3 types of RMSLE scores for two

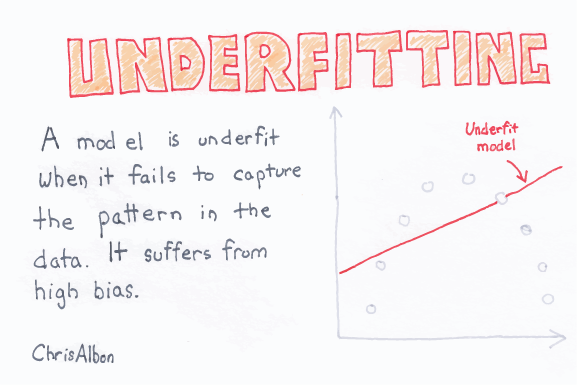

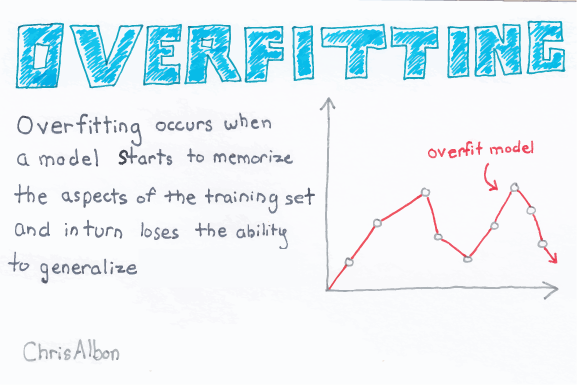

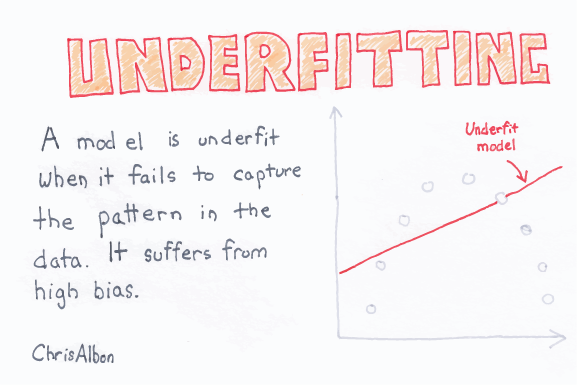

cpvalues:cp=0(relatively more complex tree) &cp=0.2(relatively less complex tree) - Comparing performance of underfit vs overfit models as a function of model complexity. See Lec01 “What is Machine Learning?” slide 27.

- How do we figure out what is “optimal” model complexity?

- In other words, the best setting of the “complexity” dial aka knob?

- For example in MassMutual RStudio Project -> `CART.Rmd` -> best slider value of \(\alpha\) = `cp` complexity parameter?

Lec 10: Mon 2/17

Announcements

- Sit next to your PS3 partner

- Problem sets:

- PS3 posted

- I’m still coordinating with grader. You’ll get PS1 scores shortly.

- Great common student question: “Why are we randomizing groups? Why can’t we just pick our own?”

Chalk Talk

- \(\log\)-transformations (you’ll be doing this in PS3)

- Problem: many students who used `OverallQual` as a predictor ended up with a negative prediction \(\widehat{y} = \widehat{\text{SalePrice}}\)

- Solution: Transforming the outcome variable space, then fitting your model, then predicting, then returning to the original variable space
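The problem and solution above can be sketched with simulated data. The column names mimic the Kaggle house prices data, but the values are made up for illustration, and the choice of `log10()` follows the Lec04 discussion rather than any particular PS3 solution:

```r
# Simulated house prices that grow multiplicatively with quality (hypothetical)
set.seed(293)
n <- 300
houses <- data.frame(OverallQual = sample(1:10, n, replace = TRUE))
houses$SalePrice <- 10^(4 + 0.15 * houses$OverallQual + rnorm(n, sd = 0.1))

# Fit on the original scale: the prediction for a low-quality house is negative
fit_raw  <- lm(SalePrice ~ OverallQual, data = houses)
pred_raw <- predict(fit_raw, newdata = data.frame(OverallQual = 1))

# 1) Transform the outcome, 2) fit, 3) predict, 4) return to the original scale
houses$log10_SalePrice <- log10(houses$SalePrice)
fit_log    <- lm(log10_SalePrice ~ OverallQual, data = houses)
pred_log10 <- predict(fit_log, newdata = data.frame(OverallQual = 1))
pred_price <- 10^pred_log10   # always positive
```

Because `10^` of any real number is positive, predictions made in log10 space can never come back negative once they are returned to dollars.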

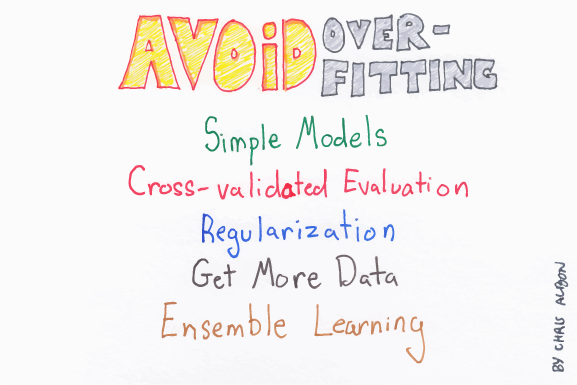

- Recap of underfitting vs overfitting:

- Why is overfitting a problem?

- Solutions to Lec09 Exercise are posted on Slack: showing the consequences on RMSLE of making predictions on separate `test` data using a model that is HELLA overfit to the `training` data using `cp = 0`.

- What are validation sets? Train your model on one set of data, but evaluate your predictions on a separate set of data. Recall Lec01 “What is Machine Learning?” slide 26 on training a self-driving car versus evaluating its performance.

Lec 09: Fri 2/14

Announcements

- I’m almost finished PS3. A large part of it will involve fitting a CART model to the same house prices data as in PS2.

- Check out Machine Learning Flashcards posted on Slack in `#general`

- Open Slack and please join the `#questions` channel. Ask all non-private questions here.

Chalk Talk

- PS2 recap

- Two TODO’s for this PS: 1) compute RMSLE and 2) submit Kaggle predictions. Where do I use `train` and where do I use `test`?

- RMSE vs RMSLE. What’s the difference? Recall from Lec04 our discussion on orders of magnitude.
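As a small illustration of the RMSE vs RMSLE difference, here is a sketch using one common definition of RMSLE (RMSE computed on `log(1 + y)`); the helper names and the two example houses are hypothetical:

```r
rmse <- function(y, y_hat) sqrt(mean((y - y_hat)^2))

# RMSLE: RMSE computed on the log scale; log(1 + .) keeps zeros finite
rmsle <- function(y, y_hat) sqrt(mean((log1p(y) - log1p(y_hat))^2))

# Two hypothetical houses, each over-predicted by $50,000
y     <- c(100000, 1000000)
y_hat <- y + 50000

rmse(y, y_hat)           # both errors count equally in dollars
rmsle(y[1], y_hat[1])    # large relative error for the cheaper house
rmsle(y[2], y_hat[2])    # small relative error for the expensive house
```

RMSE treats the two misses as identical, whereas RMSLE penalizes the miss on the cheaper house more because it is a larger error in terms of orders of magnitude.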

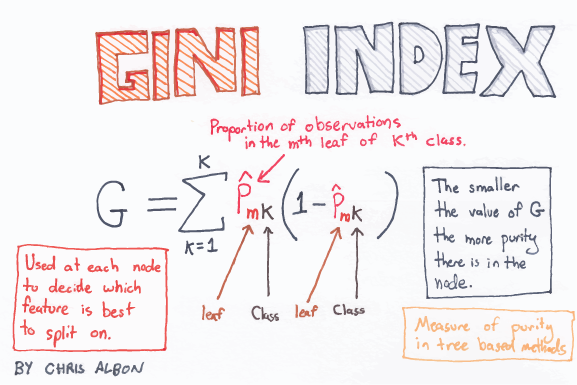

- CART wrap-up

- Open MassMutual RStudio Project -> `CART.Rmd` -> Explain \(\widehat{p}_{mk}\), in particular how it plays into “Gini Index”.

- What does the equation become when \(y\) is numerical?

- Tie-in CART “complexity parameter” \(\alpha\) with Lec01 “What is Machine Learning?” slide 20 on under vs overfit models.

- Two possible model outcomes: Underfitting vs overfitting:

- Open MassMutual RStudio Project -> `coding.Rmd` -> `# Wednesday, July 24 2019` -> `Demonstration of overfitting`

- `model_CART_3` corresponds to a HELLA overfit model, i.e. it doesn’t generalize.

- Exercise: Fit the same model but to only half the data, predict on the other half.
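To see how \(\widehat{p}_{mk}\) plays into the Gini index mentioned above, here is a minimal sketch using the ISLR form \(G = \sum_k \widehat{p}_{mk}(1 - \widehat{p}_{mk})\). The function name `gini_index()` and the two toy nodes are assumptions for illustration, not code from `CART.Rmd`:

```r
# Gini index for one node m: G = sum over classes k of p_mk * (1 - p_mk),
# where p_mk is the proportion of observations in node m that are class k
gini_index <- function(classes) {
  p_mk <- table(classes) / length(classes)
  sum(p_mk * (1 - p_mk))
}

pure_node  <- c("setosa", "setosa", "setosa", "setosa")
mixed_node <- c("setosa", "versicolor", "setosa", "versicolor")

gini_index(pure_node)     # a node containing a single class
gini_index(mixed_node)    # a 50/50 split between two classes
gini_index(iris$Species)  # the full iris data: three equally sized classes
```

A smaller Gini index means a “purer” node, which is why CART looks for splits that reduce it.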

Lec 08: Wed 2/12

Announcements

NA

Chalk Talk

Special cases

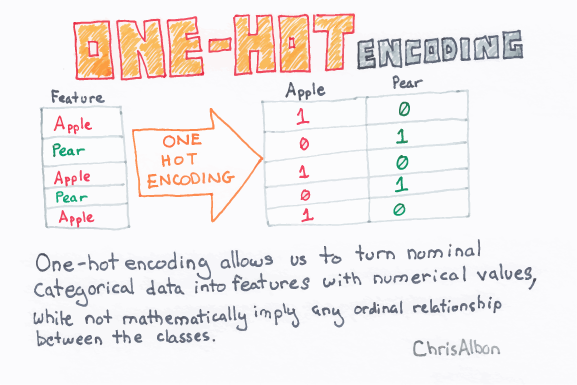

- How do I do binary splits on categorical predictors that have been one-hot encoded? Note: many statistical software packages will do this automatically for you.

- Works for both types of outcomes \(y\): numerical & categorical. Show code

What does the complexity parameter do?

Today’s chalk talk on CART is based on the Tuesday PM topics in the MassMutual Google Doc.

Open the MassMutual RStudio Project -> CART.Rmd Shiny app. (Note: after you install the necessary packages, this should knit.)

- On what variable and where along the selected variable do the splits occur?

- How many splits, i.e. how far do we “grow” the tree? Or in other words, how complex do we make the tree?

Lec 07: Mon 2/10

Announcements

- Open 293 GitHub organization -> look at PS2

Chalk Talk

Today’s chalk talk on CART is based on the Tuesday PM topics in the MassMutual Google Doc.

Open the MassMutual RStudio Project:

First: Based on coding.Rmd -> Tuesday -> iris dataset. (Note: You might have to do a little debugging to get this to knit, like setting eval = TRUE for all code blocks.)

- What is classification?

- How do I interpret trees? Binary splits on predictor variables

- Go over code for CART.

Lec 06: Fri 2/7

Announcements

- PS1

- Sit next to your PS1 teammate for today’s in-class exercise

- Albert will go over some PS1 highlights

- PS2: Groups posted, but do not clone PS2 repos until I say so. It will involve:

- Creating a model with 3 numerical & 3 categorical variables

- Applying your fitted model to the training data, comparing \(y\) and \(\hat{y}\), and computing RMSLE using `mutate()`

- Open syllabus: office hours calendar posted on top.

- If you haven’t already, please change your default GitHub profile picture. It doesn’t have to be a picture of you, but please put an image. This will help me quickly identify who’s who.

Chalk Talk

1. Lec05 Recap

Exercise from Lec05 -> MassMutual RStudio Project -> Tuesday -> Exercise: Submit Kaggle predictions using linear regression model.

- What issues did you encounter?

- What variables did you use? Did you not use?

2. Git merge conflicts

In-class exercise. The screencast is posted here.

- Open:

- RStudio -> PS1 RStudio Project

- GitHub organization for this class (click octocat button on top)

- Both of you, “pull” your repo to update it

- Both of you, edit the same line in `README.md`, but write something different.

- Both of you, commit your change but do not push it yet.

- Only one of you (call them person A), push your commit.

- Person B: Try to push your commit. You won’t be able to because you have a merge conflict. You need to resolve it.

- Person B: “Pull” your repo to bring in the merge conflict.

- Both of you, resolve the merge conflict together.

3. Ethical discussion

The iris dataset has historically been one of the most widely used datasets in statistics, first collected by Ronald A. Fisher. Type ?iris in the console and look at “Source.” While Fisher has done a lot to advance the field, some of his views were IMO problematic.

4. Classification & Regression Trees

What are classification and regression trees? Here is one example from the New York Times. Note: Smith students can get free access to the New York Times and Wall Street Journal via Smith Libraries.

Lec 05: Wed 2/5

Announcements

- Added two notes to PS1

Chalk Talk

- Multiple regression

- In MassMutual RStudio Project -> Tuesday -> Kaggle -> Exercise on Kaggle submission.

Lec 04: Mon 2/3

Announcements

- For this week’s lectures, sit with your PS1 teammate. The groups are posted on Slack under `#general`

- Lecture policies

- You don’t need to inform me about occasional absences, but please give your teammate a heads up as a courtesy.

- Please do not leave in the middle of lecture, unless you get prior approval from me, as this can be very distracting. After you get prior approval, please sit near the exit.

- PS1 is now ready!

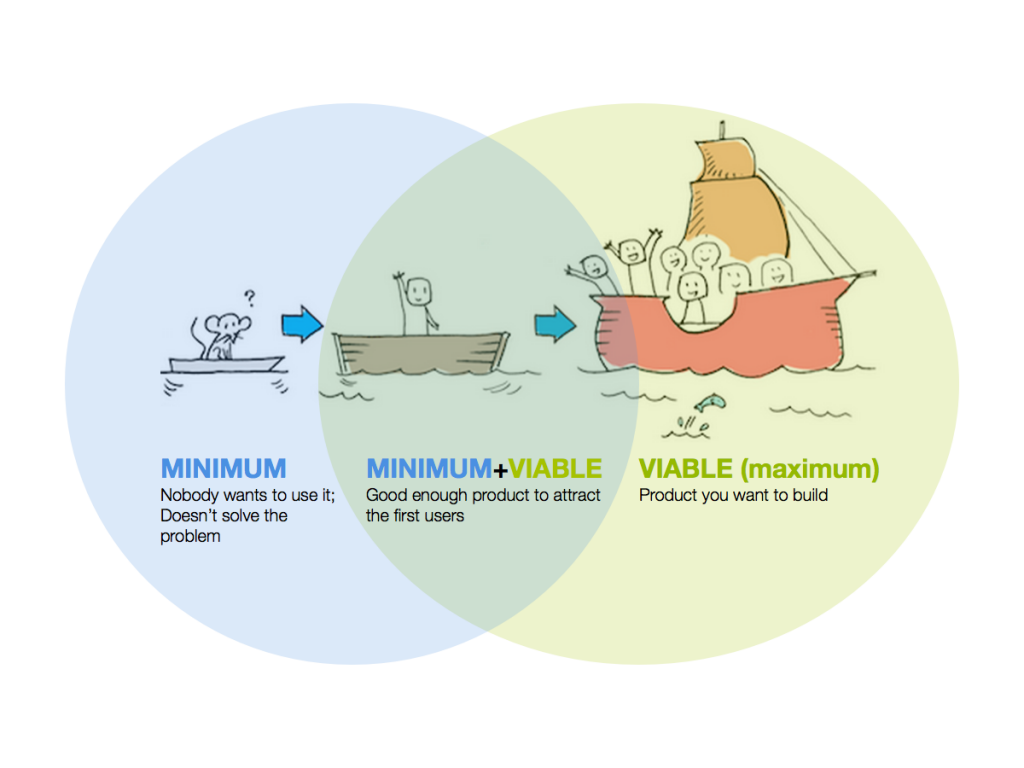

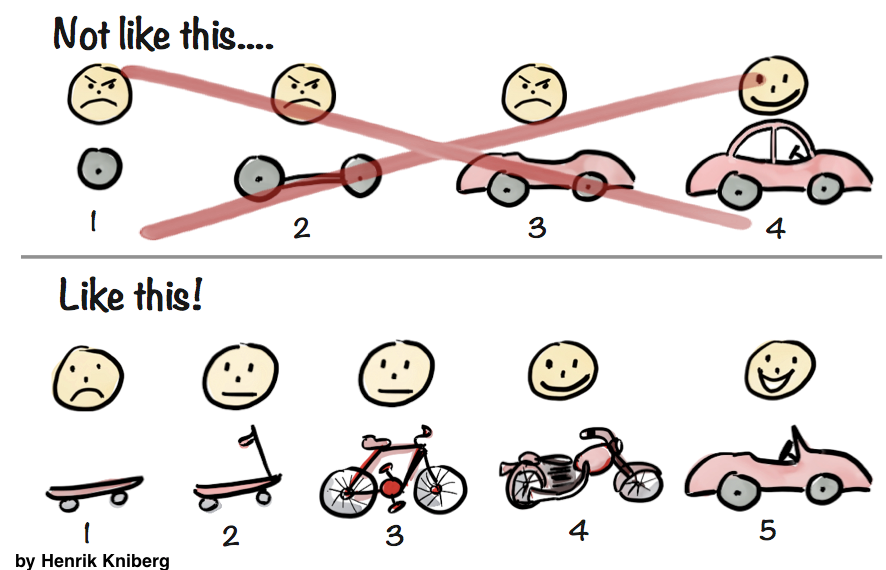

What is a minimally viable product?

When building a product, in my opinion (IMO):

- Don’t: Try to do everything completely and perfectly from the beginning. This leads to perfectionism, which leads to procrastination and “analysis paralysis.”

- Do: Start by finishing a minimally viable product ASAP!

Once you’re done with your MVP, iterate and improve by slowly adding complexity that works:

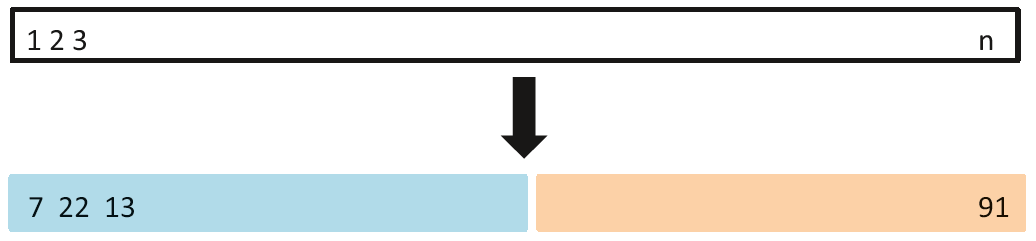

In other words:

Chalk Talk

- In-class demo

- Open `MassMutual` RStudio Project -> `coding.Rmd` -> `# Tuesday, July 23 2019` -> `## Gentle Introduction to Kaggle Competitions`. Discussion on:

- “Minimally viable product” model \(\widehat{y} = \overline{y}\) for all houses.

- log10-transformations:

- A discussion on orders of magnitude as well as another house prices example of a log10-transformation.

- Powers of Ten movie by Charles and Ray Eames.

Lec 03: Fri 1/31

Announcements

- GitHub organization for this class:

- Access it by clicking the “octocat” icon on the top left of this page

- Ensure you are a member (I sent an email invite yesterday)

- GitHub profile. Think of it as an extension of your resume. I highly encourage, but do not require, you to:

- Post your full name

- Post your affiliation

- Post a public-facing profile picture (it doesn’t have to be an image of you, it can be any picture).

- Please don’t change your GitHub ID’s mid-semester!

- FYI: GitHub is not without controversy

Chalk Talk

GitHub has many definitions that are unfortunately not straightforward. Using the fivethirtyeight R package as an example.

- Git vs GitHub

- Local vs remote

- Repos & `README.md` files as cover pages

- Cloning a repo locally (which in our case corresponds to RStudio Projects). Ex: Big green “Clone or download” button on top right.

- Commit/push and pull. Commits as units of change. Ex: Click “commits” tab on top left (currently at 523 commits)

- Branches: `master` branch is what you see. Ex: Click “Branch” button on top left

- Making contributions to `master`

- When on the inside: Create a new branch, make edits in branch, then make pull request

- When on the outside: Fork the repo (similar to creating a branch), make edits in your forked copy of repo, then make pull request

- Ex: Click on Insights tab on top right -> Network -> Scroll to earlier dates.

- Learning git has a steep learning curve. This is normal:

- xkcd

- Oh shit, git!

- In this class: I’ll (gently) force you to practice merge conflicts

Lec 02: Wed 1/29

Announcements

- On Sun 1/26 I sent an email including links to join Slack and an Intro Survey Google Form. If you did not receive this email, please come see me after class.

- Syllabus posted